Part III-B: The Extraction Code Across Sectors

The Funnel in Finance and Platforms

Series: The Extraction Code


Paid members unlock the Weekly Intelligence Report (Case File): one real-world case mapped to the funnel, decoded line-by-line, and fully sourced.

A framework you can apply in real time: who must enter, where friction is added, who can say no, and where gain concentrates.

Subscribe Now: Introductory offer: 25% off monthly and annual paid subscriptions through Jan 23, 2026.

Finance: The Credit Trap

Required Participation

You can’t opt out of needing credit. Even if you never borrow money, you need a credit score to rent housing, get insurance, access utilities, and, increasingly, get hired. Your credit score is a mandatory participation metric that determines access to survival infrastructure.

The Funnel in Action

Layer 1 - Eligibility Filters: Credit scores determine everything. But building credit requires having credit. First-time borrowers can’t get loans because they have no credit history. People who paid cash their whole lives are penalized as “credit invisible.” Immigrants, young adults, and anyone who avoided debt are treated as higher risk than people who borrowed and defaulted—because at least the defaulters have a score.

Example: You’re 25, graduated without student loans (worked full-time through college), never had a credit card (used a debit card), and paid rent in cash (roommate situation). You apply for an apartment. Rejected—no credit history. You apply for a car loan to build credit. Rejected—no credit history. You apply for a credit card to build credit. Approved—at 29.99% APR because you’re “high risk” due to lack of credit history.

The system punishes financial responsibility and rewards participation in debt infrastructure.

Layer 2 - Administrative Procedure: Credit reports are riddled with errors—yet disputing them requires navigating byzantine processes across three credit bureaus (Equifax, Experian, TransUnion) that don’t coordinate, don’t share information, and don’t fix errors unless you can prove they’re wrong (even when the burden of proof should be on whoever reported the debt).

Example: A medical debt appears on your credit report. You never received the bill because it went to an old address. You dispute it. Equifax says to allow 30 days. After 30 days, they respond: “Verified as accurate.” You ask for verification documentation. They send a letter stating that the creditor confirmed it. You ask the creditor for proof. They say they sold the debt to a collections agency. You contact the collections agency. They say they bought a portfolio of debts and don’t have the original documentation. You’re stuck in a loop. The debt remains. Your credit score drops 80 points. You’re denied housing, insurance, and employment because of a debt you may not owe, from a creditor who can’t prove you owe it, verified by a system designed to trust creditors over consumers.

Layer 3 - Algorithmic Gatekeeping: Credit scoring algorithms are proprietary. FICO won’t disclose the exact formula. VantageScore won’t explain the weighting. Lenders use internal risk models that combine credit scores with other data—income volatility, geographic risk profiles, shopping behavior tracked through data brokers—to make lending decisions you cannot see, cannot challenge, and cannot understand.

Example: You apply for a mortgage. Denied. You ask why. The lender says your credit score was too low. You check your score—it’s 720, well above the advertised minimum of 680. You ask for details. They say the decision was based on “comprehensive risk assessment.” You request the criteria. They say it’s proprietary. You file a complaint. The lender provides a generic adverse action notice listing six possible reasons, with no indication of which ones applied to you or how much weight each carried.

You cannot fix what you cannot see. You cannot challenge what you cannot understand. You cannot appeal to an algorithm.

Layer 4 - Fragmented Responsibility: Who decided you’re not creditworthy? Not the bank: it uses a FICO scoring model. FICO uses data from the credit bureaus. The credit bureaus get data from creditors, collections agencies, and public records. The collections agencies bought portfolios from debt buyers. The debt buyers purchased accounts from original creditors who may no longer exist.

When your credit score tanks because of an error, the bank says they didn’t generate the score. FICO says they didn’t generate the data. The credit bureaus say they didn’t originate the debt. The collections agency says they’re just reporting what they purchased. The original creditor is unreachable.

No one is responsible for the error. Everyone profits from the system.

Layer 5 - Time as Enforcement: Negative items remain on your credit report for 7 years (10 years for bankruptcy). Disputing errors takes 30-90 days per bureau. Rebuilding credit takes years of perfect payment history. But you need credit now—to rent housing, to get a job, to access emergency funds.

The timeline is designed to maximize harm. By the time you’ve cleared the error, you’ve lost the apartment, the job, the loan. By the time you’ve rebuilt your score, you’ve spent years paying predatory interest rates, excessive deposits, and penalty fees.

Layer 6 - No Human Discretion: When you call the credit bureau to dispute an error, you reach an automated system. When you finally get a human, they cannot override the report—they can only initiate a dispute, which goes to the creditor who reported it, who has a financial incentive to verify it as accurate rather than admit error. There is no human reviewer who can look at your situation and say, “This is clearly wrong—let’s fix it.” The system runs on automated verification loops that protect creditors, not consumers.

The Output

Diffuse Harm: Predatory lending. Debt spirals. Bankruptcy. Housing denial. Employment rejection (credit checks are now standard for hiring). Insurance rate increases (credit-based insurance scores). Poverty traps (low credit = high costs = more debt = lower credit).

Concentrated Gain: Banks profit from fees and interest. Credit bureaus profit from selling your data to lenders, insurers, employers, and landlords. FICO licenses its scoring model across the entire financial system. Debt collectors profit from buying worthless debt portfolios for pennies and extracting payments through credit score threats. Predatory lenders target low-credit borrowers with 400% APR loans, overdraft fees, and payday lending traps.

Platforms: The Terms of Service Trap

Required Participation

You cannot opt out of digital platforms and remain functional in modern society. Employment increasingly requires LinkedIn profiles. Communication requires email, messaging apps, and social media. Freelance work requires Upwork, Fiverr, or TaskRabbit. Selling goods requires eBay, Etsy, or Amazon. Rideshare driving requires Uber or Lyft. Food delivery requires DoorDash or Grubhub.

These platforms present themselves as optional services. But when they control access to income, communication, and commerce, they become mandatory infrastructure operating under private, unaccountable rules.

The Funnel in Action

Layer 1 - Eligibility Filters: Account creation requires agreeing to terms of service you cannot negotiate, privacy policies you cannot read (averaging 20,000+ words), and arbitration clauses that waive your right to sue or join class actions. You must provide your real name, phone number, email address, and, increasingly, government ID, biometric data, and linked financial accounts.

Example: You sign up to drive for Uber for supplemental income. The application requires: (1) driver’s license, (2) vehicle registration, (3) proof of insurance, (4) background check authorization, (5) Social Security number, (6) bank account for direct deposit, and (7) agreement to terms of service that include mandatory arbitration, no employment rights (you’re an independent contractor), acceptance of dynamic pricing controlled by Uber’s algorithm, and consent to deactivation at any time for any reason without explanation.

You cannot negotiate these terms. You cannot opt out of unfavorable clauses. You either agree, or you cannot access the platform. And if the platform controls access to income in your area (because it’s displaced traditional taxi services), you have no real choice.

Layer 2 - Administrative Procedure: Platform policies change constantly, and you’re responsible for compliance even if you never saw the update. Content moderation rules shift. Algorithm ranking criteria change. Fee structures adjust. Payout terms are modified. And all of this happens via brief email notifications, buried interface updates, or silent policy changes with no announcement.

Example: You sell handmade goods on Etsy. Etsy raises its transaction fee from 5% to 6.5%, on top of a $0.20 listing fee per item, and implements an “offsite ads” program that takes 12-15% of any sale that comes from a customer clicking through an ad on Google, whether you want the advertising or not, with no opt-out once your shop has made $10,000+ in the past year. These changes are announced via a blog post you didn’t read and an email you didn’t open. You’re now paying 20%+ in platform fees on every ad-driven sale. Your profit margins evaporate. You either accept the new terms or abandon the shop where you’ve built your customer base over five years.
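The fee arithmetic in this example can be checked with a short sketch. It uses only the rates quoted above (6.5% transaction fee, $0.20 listing fee, 12-15% offsite ads fee). The $30 sale price and the function name are hypothetical, chosen for illustration, and any other charges a seller might face are ignored.

```python
def platform_fees(sale_price, transaction_rate=0.065, listing_fee=0.20,
                  offsite_ads_rate=0.15):
    """Return (total_fees, share_of_sale) for one sale that came from an offsite ad.

    The default rates are the figures quoted in the example; the function
    itself is an illustrative assumption, not a platform's actual billing logic.
    """
    transaction_fee = sale_price * transaction_rate
    ads_fee = sale_price * offsite_ads_rate
    total = transaction_fee + listing_fee + ads_fee
    return total, total / sale_price


# Hypothetical $30 item, at the low and high ends of the quoted ads range.
for ads_rate in (0.12, 0.15):
    total, share = platform_fees(30.00, offsite_ads_rate=ads_rate)
    print(f"ads rate {ads_rate:.0%}: fees ${total:.2f}, {share:.1%} of the sale")
```

On a $30 item the combined fees come to roughly 19% to 22% of the sale price, depending on which ads rate applies. Because the listing fee is flat, the share climbs higher on cheaper items.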

Layer 3 - Algorithmic Gatekeeping: Platforms use proprietary algorithms to determine what content gets seen, which accounts get promoted, who gets suspended, and what constitutes policy violations. These algorithms are trained on engagement metrics (not accuracy, not fairness, not public benefit) and optimized for platform profit (not user welfare).

Example: YouTube’s content moderation AI flags your educational video about historical events as “violent content” and demonetizes it. You appeal. The appeal is reviewed by another algorithm. Rejected. You request human review. You wait 6 weeks. A human reviewer (contractor in a developing country, paid per review, incentivized to process quickly) spends 90 seconds watching your 45-minute video and upholds the decision. You’ve lost 6 weeks of revenue. Your channel’s algorithm ranking has dropped because the video was flagged. Your audience reach has collapsed. And you still don’t know what specific policy you violated or how to avoid it in the future.

Layer 4 - Fragmented Responsibility: Who decided to suspend your account? Not the platform: it uses AI for content moderation. A contractor trained the AI. The contractor used guidelines written by the trust and safety team. The trust and safety team implemented policies designed by the legal team. The legal team wrote policies to minimize the platform’s liability, not to protect users. When you appeal, the platform says it was the algorithm. The algorithm’s designers say they just implement the policy. The policy team says they interpret the terms of service. The terms of service say the platform can terminate anyone, at any time, for any reason.

No one is responsible. And your income just disappeared.

Layer 5 - Time as Enforcement: Account suspensions happen instantly. Appeals take days, weeks, or months. During that time, you cannot access your content, audience, income, or data. If you’re a rideshare driver deactivated for a false passenger complaint, you lose income immediately. Your appeal might take 2 weeks. You might get no response at all. By the time you’re reactivated (if you are), you’ve missed rent, burned through savings, or found another job—at which point the platform has successfully eliminated a driver who might have filed a complaint about wage theft or working conditions.

Layer 6 - No Human Discretion: When you contact support, you reach chatbots, then outsourced contractors reading scripts, then (maybe) tier-2 support, who still cannot override algorithmic decisions. There is no supervisor with authority to say, “This was clearly a mistake—let’s fix it.” There is no appeals process with due process. There is no neutral arbitrator. You agreed to mandatory arbitration, which means disputes go to arbitrators paid by the platform, using procedures and standards set by the platform.

You have no rights. You have terms of service.

The Output

Diffuse Harm: Income loss. Account suspensions. Content deletion. Audience destruction. Privacy violations (your data sold to advertisers, brokers, and law enforcement). Digital homelessness (when platforms are the only way to access services). Algorithmic discrimination (content moderation AI trained on biased data, producing biased outcomes).

Concentrated Gain: Platform monopolies extract value at every layer. Amazon takes 15-45% of every sale (depending on fulfillment). Uber takes 25-40% of driver fares. YouTube takes 45% of ad revenue. Facebook and Google sell your attention, your data, and your behavior patterns to advertisers. They profit from your content, your labor, and your network effects—while calling you a “user,” not a worker, and disclaiming all responsibility for your welfare.

Benefits & Eligibility: The Bureaucratic Maze

Required Participation

You cannot opt out of government benefits systems if you need them to survive. Medicaid, SNAP (food stamps), TANF (cash assistance), unemployment insurance, disability benefits, and housing vouchers are not optional services. They are survival infrastructure for people the market economy has failed.

And they are designed to be nearly impossible to access.

The Funnel in Action

Layer 1 - Eligibility Filters: Means-testing demands extensive documentation: pay stubs, tax returns, bank statements, asset verification, household composition details, citizenship or immigration status, work history, disability documentation, rental agreements, utility bills. Requirements change between application and recertification. States have different rules. Counties interpret those rules differently. And you’re responsible for knowing all of it.

Example: You apply for Medicaid in a state that expanded under the ACA. The application requires: (1) proof of income for everyone in the household, (2) proof of residency, (3) Social Security numbers, (4) citizenship documentation, (5) asset verification (bank statements for the past 3 months), and (6) verification of any other insurance coverage. You’re working two part-time jobs with irregular schedules. One employer pays cash. The other uses a payroll app. You’re staying with family temporarily (you’re not on the lease). You don’t have a bank account (you cash checks at a check-cashing service). You’re undocumented, but your children are citizens.

You cannot provide half of what they’re asking for. And if you can’t prove eligibility, you’re denied—even though you’re clearly below the income threshold and desperately need coverage.

Layer 2 - Administrative Procedure: Applications must be submitted through state portals that crash, require specific browsers, don’t save progress, and time out after 15 minutes of inactivity. Documentation must be uploaded in particular formats (PDF only, under 2MB, scanned at a specific resolution). Deadlines are strict. Extensions are rare. Reapplications require starting over from scratch.

Example: Your state requires annual Medicaid recertification. You receive a notice in the mail: you have 10 days to submit updated documentation or your coverage will be terminated. The notice arrived 7 days after it was dated (mail delay). You have 3 days to gather the pay stubs, upload them to the portal, and submit them. You try to log in, but the portal is down for maintenance. You call the helpline and face a 3-hour wait. You finally reach someone. They cannot accept documentation by phone or email; you must use the portal. The portal comes back online 2 days later. You upload documents. Error message: “File format not supported.” You convert to PDF. Upload again. Error: “File size too large.” You compress the PDF. Upload again. Success. You check the next day and the case status shows “pending.” You check again in a week: coverage terminated for “failure to provide documentation.” You submitted it on day 9. They say they didn’t receive it until day 12. You’re uninsured. Your child’s medication costs $400/month. You cannot afford it. You appeal. The appeal takes 30 days. Your child goes without medication.
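The deadline arithmetic in this example can be laid out as a small sketch. The day counts (a 10-day window, submission on day 9, agency receipt recorded on day 12) come from the example above. The calendar date and the function name are hypothetical, and the sketch assumes the clock starts on the date printed on the notice.

```python
from datetime import date, timedelta

NOTICE_WINDOW_DAYS = 10  # from the example: 10 days to submit documentation


def recert_outcome(notice_date, submitted_on, recorded_on,
                   window_days=NOTICE_WINDOW_DAYS):
    """Compare the deadline against two timestamps for the same upload:
    when the applicant submitted it, and when the agency logged it."""
    deadline = notice_date + timedelta(days=window_days)
    return {
        "deadline": deadline,
        "on_time_by_submission": submitted_on <= deadline,
        "on_time_by_agency_record": recorded_on <= deadline,
    }


notice = date(2025, 3, 1)  # hypothetical notice date
result = recert_outcome(
    notice,
    submitted_on=notice + timedelta(days=9),   # day 9: the upload succeeds
    recorded_on=notice + timedelta(days=12),   # day 12: the agency logs receipt
)
print(result)
```

The same upload is on time by one timestamp and late by the other. Which timestamp counts is the agency's choice, not the applicant's.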

Layer 3 - Algorithmic Gatekeeping: Many states now use algorithmic eligibility determination systems to identify “high-risk” applicants.

Example: You apply for SNAP benefits. The system cross-references your application against: (1) employment databases, (2) wage records, (3) DMV records, (4) utility payment histories, (5) address verification databases, and (6) fraud detection algorithms that flag “suspicious patterns.” You’re flagged because your address doesn’t match utility records (you’re staying with family) and your employment history shows gaps (you were caregiving for a parent). Your bank account shows irregular deposits (you do gig work). The algorithm determines you’re “high risk” for fraud. Auto-denied. You never spoke to a human. The decision was made by pattern-matching software designed to minimize program costs, not to feed hungry people.

Layer 4 - Fragmented Responsibility: Who denied your benefits? The state agency? The eligibility contractor they hired (Maximus, Conduent, Accenture)? The verification vendor (LexisNexis, Equifax Workforce Solutions)? The fraud detection algorithm (built by a private tech company)? The answer is deliberately unclear. When you call, the state says they’re just following the contractor’s determination. The contractor says they’re just implementing the algorithm. The algorithm’s vendor says they’re just analyzing data. The data comes from third-party databases you’ve never heard of, aggregating information you never consented to share.

Layer 5 - Time as Enforcement: Application processing takes 30-90 days. Recertification is annual—but if you miss the deadline, you’re terminated immediately and must reapply from scratch. Appeals take 60-90 days. During that time, you’re without benefits. If you need food assistance now, if your child needs healthcare now, if you’re about to be evicted now—the system has no mechanism for urgency.

The timeline is designed to maximize attrition. People who cannot survive 90 days without assistance will give up, find other means (often harmful: payday loans, skipped medications, food insecurity), or fall through the cracks. The system counts on this. Fewer people receiving benefits = lower program costs = political success.

Layer 6 - No Human Discretion: When you call to ask why you were denied, you reach a call center worker who cannot see the algorithm’s decision logic, cannot override the determination, cannot grant exceptions, and cannot escalate beyond filing an appeal, which goes back into the same automated system that denied you in the first place. There is no caseworker with authority to look at your situation and say, “This person clearly qualifies—let’s approve them.” That discretion has been deliberately eliminated and replaced with algorithmic processing designed to say “no” as often as legally permissible.

The Output

Diffuse Harm: People who qualify for assistance but cannot navigate the process go without food, healthcare, and housing support. Children suffer from malnutrition. Families become homeless. People with disabilities lose SSI because they couldn’t complete the recertification paperwork. Elderly people lose Medicaid because they didn’t understand the new portal requirements. Immigrants avoid applying altogether because they fear data-sharing with ICE.

Concentrated Gain: Contractors billing the government to administer these programs profit from complexity—more verification steps = more billable processes. Technology vendors license their eligibility algorithms across multiple states. Fraud detection companies profit from flagging applicants (paid per flag, regardless of accuracy). And politicians claim credit for “reducing fraud and waste” when they’ve actually just made it harder for eligible people to receive help.

The Pattern Across All Sectors

Look at what repeats:

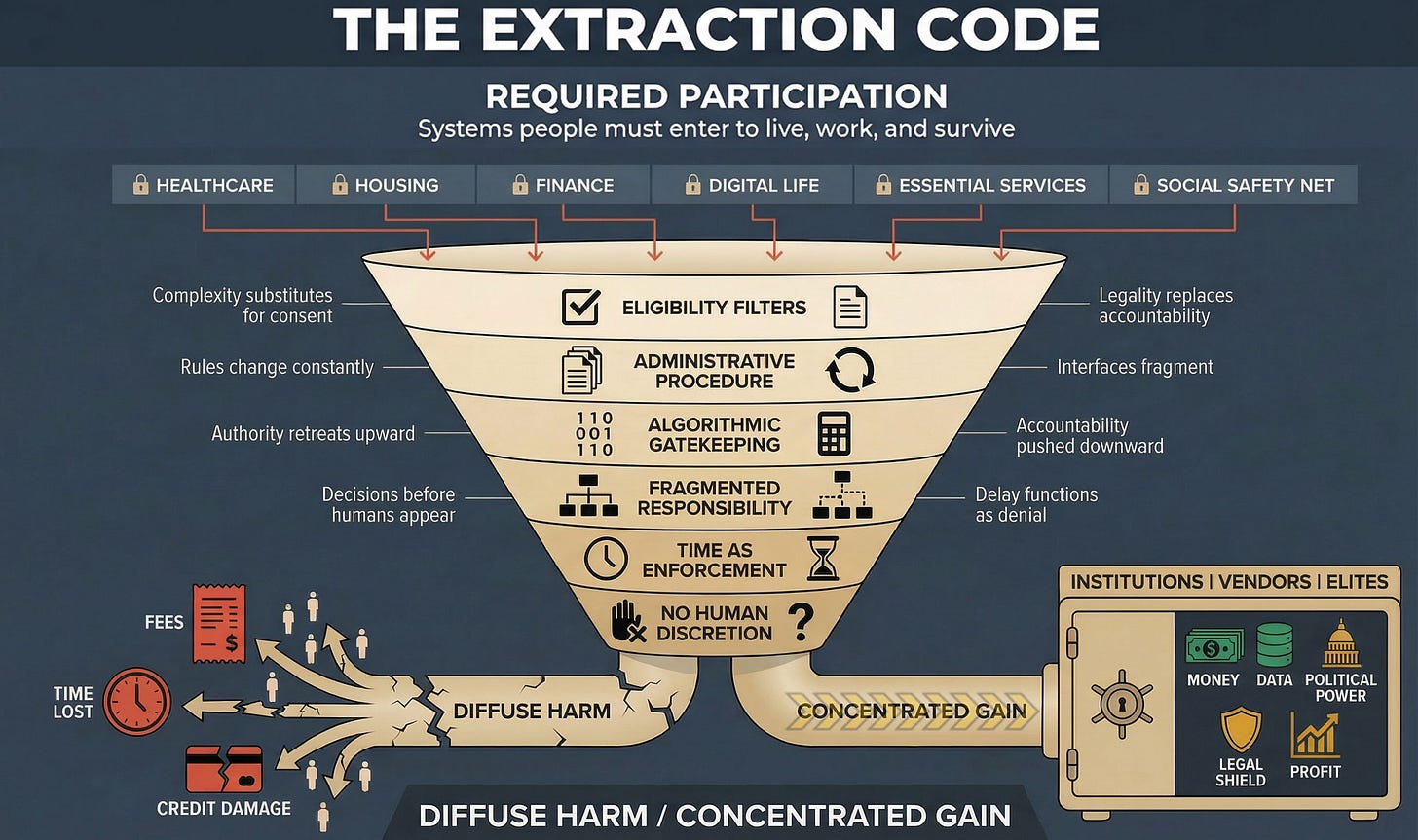

Required participation you cannot opt out of → Eligibility filters that impose complexity as gatekeeping → Administrative procedures that fragment interfaces and shift costs onto individuals → Algorithmic decisions that eliminate human judgment and operate in proprietary secrecy → Fragmented responsibility that dissolves accountability across vendors and contractors → Time delays that function as denial by attrition → Elimination of human discretion that makes appeals futile and exceptions impossible.

Every sector. Same funnel. Same outcome.

This is not a coincidence. This is not bureaucratic incompetence. This is not “the system overwhelmed by demand.”

This is design.

The same consulting firms (McKinsey, Deloitte, Accenture) sell “efficiency optimization” to hospitals, insurers, government agencies, and platforms. That optimization always means the same thing: automate decisions, fragment accountability, eliminate discretion, impose friction, maximize extraction.

The same technology vendors (Palantir, LexisNexis, FICO, CoreLogic) sell algorithmic gatekeeping systems across sectors, repackaging the same risk-scoring, fraud-detection, and eligibility-filtering tools, whether the customer is an insurer, landlord, lender, or benefits agency.

The same legal strategies (mandatory arbitration, proprietary secrecy, terms of service, contractual immunity) get copied from tech platforms to healthcare systems to government contractors, because they work. They insulate extraction from accountability.

Why This Matters

Once you see the pattern, you cannot unsee it.

The insurance claim that requires three appeals, the housing application that demands 15 documents, the credit report error that takes 90 days to dispute, the platform suspension with no human review, the benefits recertification that terminates coverage for missing a deadline you never received—they are all the same system.

Different sectors. Different language. Same infrastructure.

And that infrastructure was deliberately built, continuously refined, and legally defended by people who profit from making access difficult, accountability impossible, and resistance futile.

Exposure does not stop this machine.

Understanding the design is the first step toward dismantling it.

Next in this series: Part IV will examine Michael Cohen’s testimony as the decoder key, showing how his 2019 congressional hearing revealed the linguistic and operational patterns that allow extraction to operate “in code,” maintaining plausible deniability while causing systemic harm at scale.

Barking Justice Media LLC publishes the Extraction Code Monthly Pattern Recognition Report. This report is part of our paid subscriber tier.

“The Extraction Code” is an analytical framework developed by Mika Douglas.

The name, definitions, terminology, and structured mechanisms associated with The Extraction Code constitute original authored work.

Commercial use, derivative frameworks, training programs, or branded applications based on this work require prior written permission.