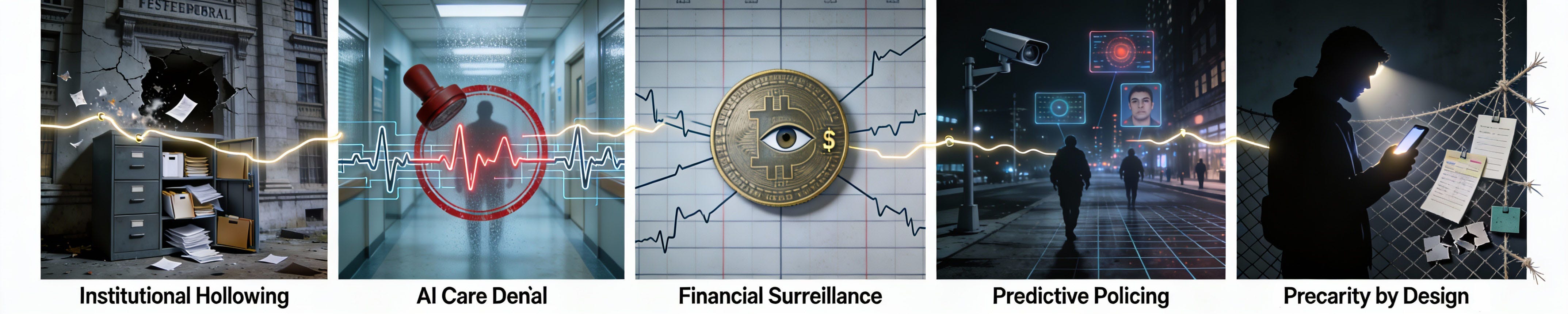

Special Report: Five Patterns That Will Define 2026

A FIRING LINE EXCLUSIVE: THE 2026 EXTRACTION OPERATING SYSTEM

The 2026 Extraction Forecast: Five Systems Going Live

In 2026, five systems begin scaling in ways that will change how everyday life works in the United States: how quickly you can get care approved, how hard it is to get a real answer from an agency, how visible your payments become, how policing tools flag people and places, and how work gets classified and controlled.

This is not a news roundup. It’s a forecast and a field manual. It translates “policy language” into the real-world signs you will actually see, and the first moves you can take before the damage shows up as fees, delays, denials, or lost leverage.

Five systems. One operating logic.

Schedule Policy/Career | Medicare prior authorization automation | regulated stablecoin payment rails | AI policing and data fusion | gig work misclassification.

When an institution says, “We are processing your request,” it often means: “The clock is running, but no one is responsible.”

FIELD NOTE: The Extraction Defense Kit

If any of these five patterns shows up in your life as a notice, a denial, a bill, a stalled dispute, or a sudden deadline, you do not need more commentary. You need first-response moves.

The Extraction Defense Kit is built for the exact moment an institution says “we are processing” while the clock keeps running. It includes plain-English scripts that force specificity on the phone, question lists that stop dead-end routing, and templates that help you document the timeline so you do not lose leverage while you wait.

If you want the tools, the Kit is in the Member Library.

PATTERN 1: The Weaponization of Institutional Incompetence (Schedule F Implementation)

By early 2026, tens of thousands of federal workers who enforce food safety, monitor environmental hazards, and regulate financial fraud will lose civil service protections and can be fired at will for any reason the President deems necessary. This isn't about efficiency; it's about systematically destroying the institutional memory that transforms data into accountability.

What’s in the Pipeline

Timeline: “Schedule Policy/Career” final rule expected Q1 2026, with mass implementation throughout the year

Scale: tens of thousands of roles shifted toward at-will vulnerability

Mechanism: converting career positions to at-will employment under the label “accountability to the president.”

This is among the biggest shakeups to the federal civil service since the Pendleton Act era [1]. Schedule F has been revived under a new name, “Schedule Policy/Career,” and it targets jobs labeled “policy-influencing,” a category defined broadly enough to reach beyond top leadership to the people who translate data into how rules are applied and enforced. If a position is moved into this schedule, the removal and appeal protections that normally apply to career civil servants no longer apply.

What Makes This Different: From the outside, everything still looks open and operational: the website is live, the forms are online, and the phone tree answers. But the people with the expertise and authority to make the system work and enforce its own rules are pushed out. What’s left is a shell that can accept your submission but can’t reliably resolve it. You lose time, miss deadlines, and absorb costs and penalties, while the institution keeps its appearance of legitimacy and you have no clear path to accountability.

The Greatest Deception

The reality is systematic knowledge destruction.

Food safety inspectors, climate scientists, epidemiologists, financial regulators, and anyone else whose expertise might constrain extraction can be fired at will once their positions are converted. When the FDA delays the food traceability rule by 30 months while hemorrhaging inspection capacity, the system is serving its intended purpose.

The government is being hollowed out while maintaining the appearance of operation. Forms still get filed. Agencies still have websites. But the institutional memory that transforms data into accountability is being systematically purged.

The Greatest Potential Harm

Immediate

Food and product safety enforcement weakens as inspection capacity declines (FSMA traceability enforcement delay, foreign inspection drop, USDA resignations, and FDA budget decrease).

Regulatory capture becomes structural, not incidental

Expertise loss turns rulemaking into theater and enforcement into selective silence

Cascading

When the next crisis hits (pandemic, contamination, fraud wave), the coordination knowledge is gone

Manufactured failure becomes justification for “emergency measures” that quietly become permanent

Accountability dissolves because the people who can prove harm were pushed out first

How We Resist

Litigation and injunction strategy: force transparency on conversion criteria and implementation timelines.

Strategic documentation: record arbitrary “policy” designations to build the evidentiary trail.

State coordination: Attorneys general and interstate compacts can slow implementation and build parallel enforcement pathways.

Most harm does not arrive as a headline. It arrives as “standard process,” “policy update,” “review,” “optimization,” and “compliance.” The words sound neutral. The impact is not.

Pattern 1: Directly cited evidence:

Government Executive report (Dec 2025): Schedule Policy/Career final rule circulated to agencies, cites “accountability to the president” as grounds for stripping protections

Office of Management and Budget/Office of Personnel Management (OMB/OPM) memo: Agencies submitted employee conversion lists by specific deadlines (March-September 2025)

Food safety timeline (Food Safety.com): 15,000+ USDA resignations, including hundreds of food safety inspectors; FDA foreign inspections down 30%; FSMA 204 delayed 30 months

Specific budget cuts: FDA Human Foods Program budget decreased by $271M

Pattern recognition: The connection between mass workforce reduction + compliance deadline delays + inspection capacity collapse = manufactured regulatory failure.

[1] The Pendleton Civil Service Reform Act (1883) replaced patronage-based hiring with merit-based civil service, establishing that federal employees would be selected through competitive examination rather than political loyalty and could not be dismissed arbitrarily for partisan reasons. The Act created structural barriers between political power and administrative function—barriers that modern extraction infrastructure systematically circumvents through outsourcing, contracting, and algorithmic delegation.

PATTERN 2: AI-Mediated Care Denial (Medicare Prior Authorization Automation)

Starting January 2026, AI algorithms will screen prior authorization requests for millions of Medicare beneficiaries, and a Senate investigation found that one major insurer denied post-acute care at more than 16 times its overall denial rate. What insurers call "efficiency" is actually the automation of medical abandonment: 29% of physicians report that prior authorization has already led to serious adverse events, including hospitalizations and permanent harm.

What’s in the Pipeline

Timeline: WISeR (Wasteful and Inappropriate Service Reduction) pilot launches January 2026.

Scale: 6 states (Arizona, New Jersey, Ohio, Oklahoma, Texas, Washington), 17 procedures, 6-year timeline.

Mechanism: AI algorithms screen prior authorization requests; clinicians retain “final say” on denials only.

Simultaneously, 3 out of 4 private insurers already use AI for prior authorization approvals, with 8-12% using it for denials.

The Greatest Deception

The deception operates on three levels:

Level 1: “AI improves efficiency”

Reality: One Senate investigation found post-acute care denials far outpaced overall denials at major insurers, including a more-than-16x ratio at Humana.

Level 2: “Clinicians make final decisions”

Reality: AI flags requests for denial; clinicians rubber-stamp. When 90% of denials are automated batch decisions “with little or no human review” (AMA), the “human oversight” is theater. These figures come from the AMA’s annual nationwide physician survey on prior authorization.

Level 3: “This reduces waste.”

Reality: 29% of physicians report that prior authorization led to serious adverse events, hospitalizations, life-threatening situations, and permanent harm. The “waste” being reduced is medically necessary care.

The Greatest Potential Harm

Systemic:

94% of physicians report that prior authorization negatively impacts clinical outcomes

82% report patients abandon treatment due to authorization delays

89% report that it increases physician burnout (40% employ staff solely for authorization work)

The Extraction Mechanism: Prior authorization isn’t about medical necessity; it’s about creating friction. Each denied claim, each delayed approval, each abandoned treatment represents revenue extracted from care delivery and redirected to shareholder returns.

When Medicare adopts this model, it normalizes algorithmic denial across the entire healthcare system. Private insurers can point to federal precedent. “If Medicare requires AI authorization, clearly it’s best practice.”

The Constitutional Dimension: When the Centers for Medicare & Medicaid Services (CMS) awards six-year contracts to third-party AI vendors to make Medicare coverage decisions, governmental authority to determine access to care is being delegated to proprietary algorithms. Citizens have no right to know why they’re denied, no ability to audit the logic, no mechanism to challenge systematically biased systems.

How We Prepare

For Patients:

Document Everything: Keep detailed records of authorization requests, denials, and delays. Create a timeline showing the harm caused by delays.

Appeal Systematically: Appeal even when it is exhausting. Each appeal creates evidence of systemic problems.

Connect Dots: If an authorization delay leads to emergency care, explicitly document in the appeal that the denial created the emergency.

For Providers:

Track Denial Patterns: Which diagnoses get auto-denied? Which procedures are consistently delayed? Document algorithmic bias.

Transparency Demands: Request an explanation of the AI decision logic. Force acknowledgment of proprietary black boxes.

Coordinate Response: Medical societies should aggregate denial data, publish patterns, and create a public record of systematic harm.
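The provider-side tracking above is a small aggregation problem. A minimal sketch of how a practice or medical society might summarize its own authorization log by procedure, surfacing both denial rates and delays. The record fields (`procedure`, `decision`, `days_to_decision`) and the procedure labels are hypothetical placeholders, not WISeR categories; real data would come from a practice's own export.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class AuthRecord:
    """One prior authorization request from a practice's own log (hypothetical fields)."""
    procedure: str         # procedure or service label
    decision: str          # "approved" or "denied"
    days_to_decision: int  # calendar days from submission to decision


def summarize(records):
    """Per procedure: denials, total requests, denial rate, and mean days to decision."""
    acc = defaultdict(lambda: [0, 0, 0])  # procedure -> [denied, total, days_sum]
    for r in records:
        acc[r.procedure][0] += int(r.decision == "denied")
        acc[r.procedure][1] += 1
        acc[r.procedure][2] += r.days_to_decision
    summary = {
        p: {"denied": d, "total": t, "denial_rate": d / t, "mean_days": s / t}
        for p, (d, t, s) in acc.items()
    }
    # Highest denial rate first, so outliers surface at the top of the report.
    return dict(sorted(summary.items(), key=lambda kv: kv[1]["denial_rate"], reverse=True))


records = [
    AuthRecord("knee-arthroscopy", "denied", 9),
    AuthRecord("knee-arthroscopy", "denied", 12),
    AuthRecord("knee-arthroscopy", "approved", 4),
    AuthRecord("sleep-study", "approved", 2),
    AuthRecord("sleep-study", "denied", 6),
]
for proc, row in summarize(records).items():
    print(f"{proc}: {row['denied']}/{row['total']} denied, "
          f"{row['denial_rate']:.0%}, mean {row['mean_days']:.1f} days")
```

Pooling these summaries across practices is what turns individual denials into the public record of patterns described above.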

How We Resist

Legal Challenges:

Administrative Procedure Act (APA) [2] violations: Delegating governmental decision-making to proprietary algorithms without transparency

Due process claims: Patients denied care via inscrutable AI with no meaningful appeal

Equal protection: If AI systematically denies care to certain populations

State Action: State insurance commissioners can impose transparency requirements for AI prior authorization, require disclosure of denial algorithms, mandate human-review thresholds, and establish patients’ rights to algorithmic explanation.

Federal Pressure: The CMS Interoperability rule (effective January 2026) requires decisions within 72 hours for urgent requests and 7 calendar days for standard requests. Only 12% of providers currently receive decisions within these timeframes. Systematic documentation of non-compliance creates enforcement leverage.
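Documenting non-compliance starts with a timeline log checked against the 72-hour and 7-day windows described above. A minimal sketch, assuming the clock starts at the submission timestamp you recorded and treating 7 days as 7 × 24 hours; exactly how the rule counts time, and which plans it covers, should be confirmed against the CMS rule text. The `AuthRequest` fields and request IDs are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Response windows as described in the text (assumed to run from submission).
WINDOWS = {"urgent": timedelta(hours=72), "standard": timedelta(days=7)}


@dataclass
class AuthRequest:
    request_id: str
    kind: str                           # "urgent" or "standard"
    submitted: datetime
    decided: Optional[datetime] = None  # None while the request is still "processing"


def check_deadline(req: AuthRequest, now: datetime) -> str:
    """Classify a request as on time, late, overdue (no decision yet), or pending."""
    deadline = req.submitted + WINDOWS[req.kind]
    if req.decided is not None:
        return "on time" if req.decided <= deadline else "late"
    return "overdue" if now > deadline else "pending"


log = [
    AuthRequest("PA-001", "urgent", datetime(2026, 2, 2, 9, 0), datetime(2026, 2, 6, 15, 0)),
    AuthRequest("PA-002", "standard", datetime(2026, 2, 3, 10, 0)),
]
now = datetime(2026, 2, 12, 12, 0)
for req in log:
    print(req.request_id, req.kind, check_deadline(req, now))
```

Each "late" or "overdue" entry, with its timestamps, is the kind of evidence an appeal or a complaint to a regulator can cite.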

The Decoder: When insurers say “AI streamlines authorization,” translate to “AI systematically denies more care faster.” When they claim “clinicians make final decisions,” understand “clinicians approve AI denials without meaningful review.”

Pattern 2: Directly cited evidence:

Medicare WISeR pilot: January 2026 start confirmed, 6 states named, 17 procedures specified, 6-year timeline

CMS Interoperability Rule: January 1, 2026, effective date, 72-hour/7-day response requirements

National survey data: 75% of insurers use AI for approvals, 8-12% for denials

AMA physician survey: 94% report negative clinical outcomes, 29% report serious adverse events, 82% report patients abandon treatment

Senate committee data: Humana’s post-acute care denial rate more than 16x its overall denial rate

Multiple medical journals/trade publications confirm these figures

Pattern recognition: The pattern I identified, “efficiency” rhetoric masking systematic denial, is supported by the 16x post-acute denial ratio and the 94% negative-outcome reporting. This is empirical, not interpretive.

[2] The Administrative Procedure Act (APA, 1946) governs how federal agencies create and implement regulations.

PATTERN 3: Financial Surveillance Infrastructure (Stablecoin Regulation via GENIUS Act)

By mid-2026, federal regulators will finalize rules creating a “regulated stablecoin” system that combines Know Your Customer identity checks, blockchain transaction transparency, and Bank Secrecy Act reporting, building a payment infrastructure where every dollar you spend on these rails can leave a permanent, analyzable trail. The “100% reserve backing” promise can be met partly with bank deposits, which are insured only up to limits, so the stablecoin you pay with could be impaired if a reserve bank fails, while your transactions remain forever visible.

What’s in the Pipeline

By July 18, 2026: Federal regulators must issue key final rules to implement the GENIUS Act.

Effective date: Most core requirements take effect on the earlier of:

January 18, 2027 (18 months after enactment), or

120 days after regulators issue final implementing rules.

That means late 2026 is possible if final rules land by mid-2026.
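The “earlier of” rule is plain date arithmetic. A minimal sketch using the dates stated above and a simple count of 120 calendar days; the function name is hypothetical, and it models only the general effective-date rule, not provisions with their own timelines.

```python
from datetime import date, timedelta
from typing import Optional

# Dates as stated in the text above.
STATUTORY_BACKSTOP = date(2027, 1, 18)  # 18 months after enactment
DAYS_AFTER_FINAL_RULES = 120


def genius_effective_date(final_rules_issued: Optional[date]) -> date:
    """Earlier of the statutory backstop or 120 days after final rules (if issued)."""
    if final_rules_issued is None:
        return STATUTORY_BACKSTOP
    return min(STATUTORY_BACKSTOP, final_rules_issued + timedelta(days=DAYS_AFTER_FINAL_RULES))


print(genius_effective_date(date(2026, 7, 18)))  # 2026-11-15: rules issued on the deadline
print(genius_effective_date(date(2026, 10, 1)))  # 2027-01-18: later rules, backstop wins
print(genius_effective_date(None))               # 2027-01-18: no final rules yet
```

So final rules issued on the July 18, 2026 deadline would put the effective date in mid-November 2026, while any final rules issued after September 20, 2026 would leave the January 18, 2027 backstop in control.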

All through 2026: Firms prepare applications, regulators stand up licensing and supervision, and states seek “substantially similar” certification for state regimes.

Scale: A national framework for dollar-denominated “payment stablecoins”, potentially supporting huge volumes of issuance and payments.

Mechanism: A licensing regime for “permitted payment stablecoin issuers,” including:

1:1 reserve backing requirements (one dollar of permitted reserves for each one dollar of stablecoins).

Monthly public reserve composition disclosures (often described as monthly reports or attestations).

Treatment of issuers as financial institutions under the Bank Secrecy Act (the main U.S. anti-money-laundering law), which brings customer identification and monitoring duties.

A path for states to run their own issuer regimes if their rules are certified as “substantially similar” to the federal framework.

The Greatest Deception

The GENIUS Act is often framed as “innovation-friendly stablecoin clarity.” The practical outcome can also be read as a new payments infrastructure with built-in compliance and traceability, where who is allowed to operate is tightly controlled.

Deception Layer 1: “This creates clarity for digital payments.”

Reality: It creates a regulated stablecoin payment rail where:

Issuers are treated like Bank Secrecy Act financial institutions, which means they must conduct Know Your Customer (KYC) identity checks and maintain ongoing monitoring.

Many stablecoins move on public blockchains. If so, the transaction flow can be visible as an address-to-address graph. When combined with KYC at the on- and off-ramps, that visibility can become identification.

Compliance and reporting obligations raise fixed costs, which can favor large incumbents over smaller entrants. (This is a structural effect of regulated licensing, not a claim of intent.)

Deception Layer 2: “100% reserve backing protects consumers.”

Reality: The law allows several “permitted reserve” categories, including bank deposits. Bank deposits can be safe, but deposit insurance has limits. If large reserve balances sit at a bank and the bank fails, amounts above insurance limits can be exposed to loss or delayed recovery.
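To see how much of a reserve could sit above insurance limits, a minimal sketch. It assumes the FDIC standard maximum of $250,000 per depositor, per insured bank, per ownership category, and treats the issuer as a single depositor at each bank; pass-through coverage and the actual regulatory treatment of stablecoin reserves are not modeled. Bank names and balances are hypothetical.

```python
# Assumed FDIC standard maximum per depositor, per insured bank, per ownership category.
FDIC_LIMIT = 250_000


def uninsured_exposure(deposits_by_bank: dict, limit: float = FDIC_LIMIT) -> dict:
    """Amount above the insurance limit at each bank, treating the issuer as one depositor."""
    return {bank: max(0.0, amount - limit) for bank, amount in deposits_by_bank.items()}


reserves_at_banks = {"Bank A": 400_000_000, "Bank B": 200_000}  # hypothetical balances
exposure = uninsured_exposure(reserves_at_banks)
print(exposure)
print(f"Uninsured total: ${sum(exposure.values()):,.0f}")
```

Under these assumptions, $400 million parked at one bank leaves $399.75 million uninsured, which is why “100% reserves” and “100% insured” are not the same claim.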

Deception Layer 3: “This prevents illicit finance.”

Reality: Anti-money-laundering requirements can reduce abuse, but they also expand identity-linked monitoring of payment flows:

Issuers are pulled into Bank Secrecy Act compliance, including customer identification and due diligence.

The law also pushes frequent reserve transparency (monthly public reserve composition disclosures), which increases ongoing reporting and audit pressure.

The Greatest Potential Harm

1) Always-on transaction trails (a “payment panopticon” effect)

If stablecoin activity runs on public blockchains, transaction graphs can be analyzed at scale. Even when addresses are pseudonymous, patterns such as timing, amounts, counterparties, and network links can be highly revealing. When tied to KYC identity checks at major gateways, this can enable:

Rich transaction mapping

AI-driven pattern profiling

Long-lived records that are hard to erase once published to a ledger
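To make “visibility can become identification” concrete: a minimal sketch of how a pseudonymous address can be tied back to a person once any address in its transaction neighborhood is known from KYC at an on- or off-ramp. The transfers, addresses, and KYC table are hypothetical, and real chain-analysis tools combine many more signals (timing, amounts, clustering heuristics) than this breadth-first search over counterparties.

```python
from collections import deque


def hops_to_identity(start, transfers, kyc_known):
    """Breadth-first search from `start` across counterparties to the nearest KYC'd address.

    Returns (identified_address, identity, hops), or None if no path exists.
    """
    neighbors = {}
    for a, b in transfers:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)  # treat each transfer as an undirected link
    seen, queue = {start}, deque([(start, 0)])
    while queue:
        addr, hops = queue.popleft()
        if addr in kyc_known:
            return addr, kyc_known[addr], hops
        for nxt in sorted(neighbors.get(addr, ())):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, hops + 1))
    return None


# Hypothetical on-chain transfers (sender, receiver) and one KYC'd exchange customer.
transfers = [("0xA1", "0xB2"), ("0xB2", "0xC3"), ("0xC3", "0xD4"), ("0xE5", "0xF6")]
kyc_known = {"0xA1": "Customer #1042 at Exchange X"}

print(hops_to_identity("0xD4", transfers, kyc_known))  # linked to the KYC'd customer in 3 hops
print(hops_to_identity("0xF6", transfers, kyc_known))  # None: no path to a known identity
```

The point of the sketch is the asymmetry: one identified endpoint can expose every address within a few hops of it, and a public ledger keeps those links available indefinitely.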

2) Financial fragility through reserve design choices

If significant reserves are held as bank deposits, stress can propagate in both directions:

A bank scare can create redemption pressure on stablecoins.

A stablecoin redemption wave can force rapid withdrawals from banks.

This is a plausible channel of contagion because stablecoins can be moved quickly and redeemed at scale, while bank liquidity is not infinite. (This is a risk argument, not a guarantee.)

3) Consolidation as governance

Two places the law explicitly raises the bar:

State frameworks must be certified as “substantially similar,” and the Stablecoin Certification Review Committee (SCRC) votes on those certifications.

A public non-financial services company generally cannot issue a payment stablecoin without the unanimous approval of the SCRC.

Net effect: fewer issuers may be able to clear the licensing and governance hurdles, concentrating stablecoin issuance in a smaller set of regulated entities.

The Decoder:

When you hear “payment innovation” or “regulatory clarity,” translate it into: a licensed payment rail with identity checks, reporting duties, and design choices that shape who can participate and how observable transactions become.

How We Prepare

Financial Literacy: Understand that stablecoins are NOT equivalent to cash. They are:

Corporate liabilities, not government obligations

Subject to counterparty risk (issuer solvency)

Potentially exposed to losses on their reserves (for example, uninsured bank deposits) despite “100% reserve” claims

Often recorded on public ledgers that create permanent transaction records

Alternative Systems: Support development of truly decentralized payment systems, cooperative financial infrastructure, mutual credit networks, time banks—systems that don’t require corporate issuers or create surveillance records.

Documentation Infrastructure: Create public databases tracking:

Which institutions apply for stablecoin licenses

What reserve compositions they disclose

Which applications SCRC approves/denies and why

Any discrepancies between disclosed reserves and actual holdings
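One check such a database could run on each monthly disclosure: compare disclosed reserves with stablecoins outstanding (the 1:1 requirement) and report how concentrated the reserves are in bank deposits. A minimal sketch; the issuer, reserve category names, and figures are hypothetical. It can only test a disclosure against itself: catching gaps between disclosed and actual holdings requires independent data.

```python
from dataclasses import dataclass


@dataclass
class ReserveDisclosure:
    issuer: str
    month: str
    coins_outstanding: float  # stablecoins in circulation, in dollars
    reserves: dict            # hypothetical category name -> dollars


def review(d: ReserveDisclosure) -> dict:
    """Flag shortfalls against 1:1 backing and report the share held as bank deposits."""
    total = sum(d.reserves.values())
    return {
        "issuer": d.issuer,
        "month": d.month,
        "reserve_ratio": total / d.coins_outstanding,
        "shortfall": max(0.0, d.coins_outstanding - total),
        "bank_deposit_share": d.reserves.get("bank_deposits", 0.0) / total if total else 0.0,
    }


report = review(ReserveDisclosure(
    issuer="Example Issuer",
    month="2026-09",
    coins_outstanding=1_000_000_000,
    reserves={"treasury_bills": 600_000_000, "bank_deposits": 380_000_000, "cash": 10_000_000},
))
print(report)
```

Stored month by month, these rows make trends visible, such as a reserve ratio slipping below 1.0 or a growing reliance on bank deposits.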

How We Resist

Transparency Demands: Regulators must publish full SCRC deliberations, application criteria, and approval/denial rationales. FOIA every aspect of implementation. Force a public record of how consolidation proceeds.

State Alternatives: States can create truly safe stablecoin frameworks: 100% backing in actual Treasuries (not repos), no bank deposit exposure, stronger consumer protections, maximum transparency.

Alternative Infrastructure: Don’t wait for corporate stablecoins. Build mutual aid payment systems, community currencies, and cooperative banking alternatives.

The Decoder: When you hear “innovation-friendly regulation,” translate to “surveillance infrastructure with controlled access.” When you hear “100% reserves,” understand “potentially backed by risky bank deposits.” When you hear “licensed issuers,” recognize “consolidation in extraction-aligned institutions.”

If prior authorization delay or denial is in your life this year, the Extraction Defense Kit includes the call script, the appeal timeline log, and the exact “what to ask for” checklist.

Pattern 3: Directly cited evidence:

GENIUS Act: Enacted July 18, 2025; July 18, 2026 regulatory deadline explicit in statute

FDIC proposed rulemaking: December 2025, specific application procedures

Federal Reserve/Brookings/Richmond Fed analyses: Confirming reserve composition rules (including uninsured deposits), BSA/AML requirements, identity verification mandates

Text of actual legislation available via Congress.gov

Pattern recognition: The surveillance capability analysis comes from technical reality of blockchain transparency + statutory KYC requirements + BSA reporting obligations. These aren’t assumptions—they’re how the technology and law actually work.

PATTERN 4: Algorithmic Social Control (AI Predictive Policing Expansion)

Throughout 2026, police departments are deploying AI systems that claim to predict crime but actually create self-fulfilling prophecy loops: algorithms trained on historical over-policing in Black neighborhoods predict more crime in those same areas, justify more police presence, generate more arrests, and "confirm" the AI's bias. When attending a protest gets you flagged in risk-scoring systems and your social media posts contribute to threat assessments, democratic participation becomes dangerous.

What’s in the Pipeline

Timeline: Aggressive deployment throughout 2026.

Scale: “Smart cities” data fusion systems, social media monitoring, sentiment analysis, predictive risk scoring.

Mechanism: AI analyzes crime data, social media, surveillance footage, and biometric data to predict “high-risk” individuals and locations.

Police departments globally are rapidly integrating AI for:

Real-time crime prediction and “hotspot” identification

Social media monitoring with sentiment analysis and risk scoring

Video analytics identifying “suspicious activity”

Facial recognition integrated with criminal databases

“Brain fingerprinting” systems (UAE already prosecuting based on this)

The U.S. is accelerating adoption, with ICE “vacuuming up data from a huge range of sources” for immigration enforcement. At the same time, “unvetted and unregulated technologies are being built into surveillance and policing.”

The Greatest Deception

“AI makes policing objective and efficient.”

Reality: AI systems trained on historical arrest data don’t predict crime; they indicate where police have made arrests. When those arrests reflect decades of over-policing in Black communities, AI doesn’t eliminate bias. It automates and amplifies it.

In a letter to the Department of Justice, U.S. senators wrote: “mounting evidence indicates that predictive policing technologies do not reduce crime...instead, they worsen the unequal treatment of Americans of color by law enforcement.”

“Humans make the final decisions.”

Reality: When AI generates “risk scores” or “hotspot maps,” human officers act on those recommendations. The AI’s probabilistic output is treated as a factual prediction. Officers don’t question the algorithm—they execute its directives.

“This improves public safety.”

Reality: The UAE’s system claims 68% accuracy at predicting crimes. That means it is wrong 32% of the time. When the error is a false positive, it lands on a human being subjected to heightened surveillance, more stops, and more arrests, based on algorithmic guesswork.
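A single accuracy figure also hides how errors pile up when the predicted behavior is rare. A minimal worked sketch under loudly hypothetical assumptions (not figures from the UAE system): the system is 68% accurate on both offenders and non-offenders (sensitivity = specificity = 0.68), and 1% of a population of 100,000 would actually commit the predicted offense.

```python
def flagged_breakdown(population: int, base_rate: float, sensitivity: float, specificity: float):
    """Expected true and false positives when a classifier flags a whole population."""
    offenders = population * base_rate
    non_offenders = population - offenders
    true_pos = offenders * sensitivity             # offenders correctly flagged
    false_pos = non_offenders * (1 - specificity)  # non-offenders wrongly flagged
    precision = true_pos / (true_pos + false_pos)  # share of flagged people who are offenders
    return true_pos, false_pos, precision


tp, fp, precision = flagged_breakdown(100_000, base_rate=0.01, sensitivity=0.68, specificity=0.68)
print(f"Correctly flagged: {tp:,.0f}; wrongly flagged: {fp:,.0f}")
print(f"Share of flagged people who are false positives: {1 - precision:.1%}")
```

Under these assumptions, about 680 people are correctly flagged and about 31,680 are wrongly flagged, so roughly 98% of the people the system points police toward did nothing. The rarer the predicted behavior, the worse that ratio gets.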

The Greatest Potential Harm

Self-Fulfilling Prophecy Loops:

AI predicts crime in neighborhood X (based on historical over-policing).

Police deploy more resources to neighborhood X.

More policing creates more arrests in neighborhood X.

More arrests “confirm” the AI’s prediction.

AI increases the prediction for neighborhood X.

The loop accelerates.

This creates permanent surveillance zones where entire communities live under algorithmic suspicion, with no mechanism to break the cycle.
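The loop above can be reproduced in a toy simulation. A minimal sketch under hypothetical assumptions: two neighborhoods have the same true crime rate; the one with more recorded arrests is labeled the “hotspot” and gets 70% of patrols; and recorded arrests scale with patrol presence. This is an illustrative model, not any vendor’s actual algorithm.

```python
def simulate_feedback_loop(arrests, crime_rate, patrols=100.0, hotspot_share=0.7, rounds=20):
    """Toy model of the prediction loop; returns each area's share of recorded arrests per round.

    Hypothetical assumptions: the area with the most recorded arrests gets `hotspot_share`
    of patrols, the others split the rest evenly, and recorded arrests equal
    patrols x that area's true crime rate (you only record what you are there to see).
    """
    if len(arrests) < 2:
        raise ValueError("need at least two areas")
    arrests = dict(arrests)  # copy so the caller's data is not mutated
    history = []
    for _ in range(rounds):
        hotspot = max(arrests, key=arrests.get)  # step 1: "predict" the hotspot
        for area in arrests:                     # steps 2-3: deploy, record arrests
            share = hotspot_share if area == hotspot else (1 - hotspot_share) / (len(arrests) - 1)
            arrests[area] += patrols * share * crime_rate[area]
        total = sum(arrests.values())            # steps 4-5: new data "confirms" the prediction
        history.append({a: n / total for a, n in arrests.items()})
    return history


# Same true crime rate in both areas; X starts with more recorded arrests
# because it was historically over-policed.
history = simulate_feedback_loop({"X": 60, "Y": 40}, {"X": 0.1, "Y": 0.1})
print(f"X's share of recorded arrests: start 60%, after 20 rounds {history[-1]['X']:.1%}")
```

Because Y always receives fewer patrols, its equal crime rate never shows up in the recorded data, so nothing fed back into the model can correct the starting disparity. In this toy model, X’s share climbs every round toward the 70% hotspot allocation.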

Social Credit Systems via Function Creep: Systems deployed for “public safety” expand to:

Monitoring protests (tracking “individuals who pose a supposed threat to public safety or are linked to them”)

Social media sentiment analysis (“determining users’ sentiments, assigning risk scores, mapping online relationships”)

Behavioral prediction (“whether people have attended the same protests”)

The infrastructure for comprehensive social control is being built under the guise of crime prevention.

Chilling Effects on Democracy: When attending a protest gets you flagged in police AI systems, when social media posts contribute to risk scores, when your “online relationships” become evidence of threat, democratic participation becomes dangerous.

How We Prepare

Know Your Rights: Document every police interaction. If stopped based on “predictive” criteria, demand to know the basis. Create paper trails showing you were targeted algorithmically, not based on actual suspicious behavior.

Community Defense Networks: Organize rapid-response systems for neighborhoods within AI-designated “high-risk” zones. Document patterns of over-policing and create evidence of bias in deployment.

Digital Hygiene: Assume social media is monitored. Assume sentiment analysis is applied to posts. Assume relationship mapping connects your networks. Act accordingly for high-risk activities (organizing, protest, advocacy).

How We Resist

Ban Biased Data: Advocate for legislation prohibiting the use of historical arrest data known to contain racial bias. If AI can’t be trained on biased data, one of the largest sources of biased outputs is cut off.

Algorithmic Transparency Requirements: Demand that cities publish:

Which AI systems are deployed

What data they use

How risk scores are calculated

Accuracy rates and false positive rates by demographic

Appeals processes for individuals flagged by systems

Community Oversight: Cities like NYC have mandated disclosure of surveillance tools before acquisition. Require community approval, independent audits, sunset provisions, and regular public reporting.

Litigation: Challenge systems under:

Equal protection (discriminatory impact on communities of color)

Fourth Amendment (suspicionless surveillance)

First Amendment (chilling effect on protest/speech)

The Decoder: When you hear “data-driven policing,” translate to “automation of historical bias.” When you hear “hotspot prediction,” understand “over-policing certain neighborhoods based on past over-policing.” When you hear “risk assessment,” recognize “probabilistic suspicion with no accountability.”

Pattern 4: Directly cited evidence:

NAACP policy brief: Mounting evidence AI doesn’t reduce crime, increases racial bias

Senate letter to DOJ: Same finding

Brennan Center research: Data fusion systems, social media monitoring, risk scoring

Specific deployments: UAE 68% accuracy claims, Dubai systems, China’s IJOP, Japan’s Crime Nabi

Centre for Democracy & Technology: “Reckless deployment,” lack of efficacy proof

Police Chief Magazine, Deloitte, and multiple sources confirm rapid expansion

Pattern recognition: The self-fulfilling prophecy loop is a documented mechanism: AI trained on biased arrest data → predicts more crime in over-policed areas → more deployment → more arrests → confirms prediction. This is described in the Brennan Center and the NAACP reports as the core problem.

PATTERN 5: Precarity by Design (Gig Worker Misclassification Acceleration)

Throughout 2026, platform companies are fighting to establish legal frameworks that keep workers classified as “independent contractors” while subjecting them to complete corporate control: algorithmic assignment, unilateral pay rates, opaque performance metrics, and termination without process. Workers bear all operational costs and income volatility without any labor protections. When corporations spend $200+ million to pass Prop 22, that’s not workers choosing flexibility; that’s extraction industries buying their preferred labor classification.

What’s in the Pipeline

Timeline: Throughout 2026, aggressive expansion and international spread.

Scale:

EU Platform Work Directive: Member states must implement by December 2026

DOL’s “economic reality” test: Active enforcement accelerating

California AB5 and similar state laws: Ongoing litigation and compliance battles

Mechanism: Legal frameworks creating middle categories between employee and contractor, extracting labor value while avoiding obligations

The Greatest Deception

“The gig economy provides flexibility.”

Reality: The “flexibility” is unidirectional. Workers can’t negotiate rates, can’t refuse assignments without algorithmic punishment, can’t access benefits, can’t organize.

Companies maintain total control over:

Which workers get which jobs (algorithmic assignment)

Pay rates (unilateral determination)

Performance standards (opaque metrics)

Deactivation/termination (no due process)

Meanwhile, workers bear:

All operational costs (vehicle, maintenance, insurance)

All income volatility (no minimum, no guaranteed hours)

All risk (no unemployment, no workers’ comp, no health coverage)

“Independent contractors choose to work this way.”

Reality: When Proposition 22 required $200+ million in corporate spending to pass (funded by Uber, Lyft, DoorDash, Instacart), that’s not the workers choosing. That’s the extraction industry buying its preferred labor classification.

“This is the future of work.”

Reality: This is the extraction industry optimizing its cost structure by making labor precarious, atomized, and powerless.

The Greatest Potential Harm

Systematic Wealth Transfer:

Uber/Lyft drivers earn below minimum wage after expenses.

DoorDash settled a $100 million misclassification case.

Microsoft paid $97 million for contractor misclassification.

These aren’t accidents—they’re the business model.

Erosion of Labor Standards: When major companies successfully create “third category” workers (neither employee nor contractor), they establish precedent for broader application:

Warehouse workers

Home healthcare aides

Delivery drivers

Food service workers

The gig model metastasizes until the default employment relationship is precarious.

Algorithmic Management Without Accountability: Workers are managed by opaque algorithms that:

Assign tasks

Monitor performance

Determine pay

Decide termination

There’s no human to negotiate with, no transparency into decision logic, and no appeals process. You’re managed by code you can’t see, based on metrics you don’t know, subject to decisions you can’t challenge.

Collective Action Suppression: Gig workers face steep legal barriers to organizing. They’re not employees, so they’re not protected under the National Labor Relations Act (NLRA). They’re “independent contractors,” so collective rate-setting can be attacked as “price fixing.” They’re atomized, surveilled, and replaceable.

How We Prepare

Documentation Protocols: Workers should systematically document:

Degree of company control (assigned routes, mandatory acceptance rates, discipline for refusals)

Integration into business (is this work core to company operations?)

Economic dependence (percentage of income from a single platform)

Investment requirements (equipment, insurance, maintenance costs)

This evidence supports reclassification claims and litigation.

Collective Documentation: Create worker-led databases tracking:

True hourly rates after all expenses

Frequency of deactivation and stated reasons

Algorithmic assignment patterns

Changes to pay structures and notification (or lack thereof)
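The first item, true hourly rate after expenses, and the economic-dependence measure from the documentation protocol are both simple arithmetic once the logs exist. A minimal sketch; the `Week` fields, the platform names, the figures, and the $0.40-per-mile vehicle cost are hypothetical placeholders (workers should substitute their own costs). Hours are logged-in hours, so unpaid waiting time counts against the rate.

```python
from dataclasses import dataclass


@dataclass
class Week:
    platform: str
    gross_pay: float        # earnings paid by the platform, in dollars
    tips: float
    hours_logged_in: float  # includes unpaid waiting time between jobs
    miles: float


def true_hourly_rate(weeks, cost_per_mile: float, other_expenses: float = 0.0) -> float:
    """Net earnings per logged-in hour after vehicle and other expenses."""
    income = sum(w.gross_pay + w.tips for w in weeks)
    costs = sum(w.miles for w in weeks) * cost_per_mile + other_expenses
    hours = sum(w.hours_logged_in for w in weeks)
    return (income - costs) / hours


def income_share_by_platform(weeks) -> dict:
    """Economic dependence: fraction of gross income coming from each platform."""
    totals = {}
    for w in weeks:
        totals[w.platform] = totals.get(w.platform, 0.0) + w.gross_pay + w.tips
    grand = sum(totals.values())
    return {p: amount / grand for p, amount in totals.items()}


weeks = [Week("Platform A", 820.0, 140.0, 52.0, 900.0),
         Week("Platform B", 150.0, 20.0, 9.0, 160.0)]
rate = true_hourly_rate(weeks, cost_per_mile=0.40, other_expenses=60.0)
print(f"True hourly rate: ${rate:.2f}")
print(income_share_by_platform(weeks))
```

Pooled across many workers, these two numbers speak directly to the “economic dependence” and “investment requirements” factors listed above.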

Alternative Infrastructure: Support platform cooperatives, worker-owned alternatives, and collective bargaining, even for contractors.

How We Resist

Strategic Litigation:

State-by-state enforcement of California Assembly Bill 5 (AB5) and similar laws

Federal Department of Labor (DOL) complaints

Class actions for misclassification

Unemployment insurance claims (forcing adjudication of employee status)

Legislative Campaigns: Advocate for:

Federal legislation protecting gig worker rights

Platform accountability requirements

Algorithmic transparency mandates

Direct Action: Workers coordinating strikes, slowdowns, and refusal campaigns. Even without traditional union protections, collective withdrawal of labor creates leverage.

The Decoder: When companies say “independent contractor,” translate to “extraction of labor value without obligations.” When they say “flexibility,” understand “unilateral control without accountability.” When they say “entrepreneurship,” recognize “bearing all risk with no power.”

PATTERN 5: Directly cited evidence:

EU Platform Work Directive: December 2026 implementation deadline confirmed

DOL economic reality test: March 2024 effective date, six-factor framework detailed

California AB5: ABC test quoted from the statute; Prop 22 exemption for app-based drivers confirmed

Settlement amounts: DoorDash $100M, Microsoft $97M (documented cases)

Remote.com, multiple law firm analyses confirming global spread

Pattern recognition: The “precarity by design” analysis connects documented business models (contractors bearing all costs/risks while companies maintain control) with legal frameworks explicitly created to enable this. The Prop 22 spending of $200M+ is public record.

Synthesis: The 2026 Extraction Operating System

These aren’t five separate patterns—they’re integrated components of a unified extraction system.

The foundation is institutional sabotage. Schedule F strips away the expertise that could constrain what comes next: food safety inspectors who would catch contamination, financial regulators who would spot fraud, scientists who would document harm. Institutions still exist but can no longer function—extraction operates behind a facade of legitimacy.

With regulatory capacity dismantled, algorithmic extraction accelerates. AI systems deny medically necessary healthcare 16 times more often than human reviewers do. Each denied claim, each treatment delay, each abandoned therapy represents value transferred from health outcomes to shareholder returns. When Medicare adopts this model, it normalizes algorithmic abandonment system-wide.

Financial surveillance infrastructure makes resistance visible before it organizes. The GENIUS Act establishes payment systems that create permanent, analyzable records linking identity to spending. Stablecoin issuers conduct Know Your Customer checks; blockchain transactions leave public trails. The infrastructure exists to map who funds what, who associates with whom, and who participates in which movements.

Predictive policing manages populations made precarious by the other patterns. AI trained on historical over-policing creates self-fulfilling loops: algorithms predict crime in already over-policed neighborhoods, justify increased deployment, generate more arrests, and “confirm” their accuracy. Workers organizing get flagged in risk-scoring systems. Protesters accumulate threat assessments. Collective action becomes algorithmically dangerous.
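The self-fulfilling loop described above can be shown with a toy model. Two neighborhoods have the same true crime rate, but Neighborhood A starts with more recorded incidents because of past over-policing. Each round, the area with more records is labeled the “hotspot” and receives most of the patrols. New records scale with patrol presence, not with any difference in crime. This is a deliberately simplified Python sketch with hypothetical numbers. It is not a model of any specific vendor’s software.

```python
def simulate_feedback_loop(recorded_a: int, recorded_b: int, rounds: int,
                           hot_patrols: int = 80, cold_patrols: int = 20,
                           patrols_per_record: int = 10) -> list[tuple[int, int]]:
    """Toy runaway-feedback model; all parameters are hypothetical."""
    history = []
    for _ in range(rounds):
        # "Prediction": the area with more recorded incidents is labeled the hotspot.
        a_is_hot = recorded_a >= recorded_b
        patrols_a = hot_patrols if a_is_hot else cold_patrols
        patrols_b = cold_patrols if a_is_hot else hot_patrols
        # Both areas have the SAME true crime rate: one new record per 10 patrols.
        # Records therefore track where police are sent, not where crime differs.
        recorded_a += patrols_a // patrols_per_record
        recorded_b += patrols_b // patrols_per_record
        history.append((recorded_a, recorded_b))
    return history


history = simulate_feedback_loop(60, 40, rounds=10)
a, b = history[-1]
print(a, b, f"{a / (a + b):.0%}")  # prints 140 60 70%
```

Neighborhood A’s share of records climbs from 60% to 70% and keeps rising toward the 80% patrol split. The model reads that climb as confirmation, even though the underlying crime rates never differed.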

The workforce is restructured to eliminate resistance capacity. Gig-economy expansion creates workers who bear all the costs while companies keep total control. Classified as “independent contractors,” these workers can’t organize, can’t strike without employment protections, and can’t bargain because they’re atomized and surveilled. When one algorithm-generated poor performance score stands between you and your income, you don’t challenge the system.

The system is self-reinforcing:

Weakened institutions can’t regulate AI systems. Financial surveillance identifies resistance before it organizes. Predictive policing suppresses collective action. Precarious workers can’t afford to resist. Care denials drive medical debt and bankruptcy, which keeps people precarious.

The genius is linguistic. Every mechanism operates through euphemism: “accountability” for purging expertise, “efficiency” for denying care, “innovation” for surveillance, “public safety” for automated bias, “flexibility” for exploitation. The language sounds neutral. The impact is a systematic transfer of wealth, power, and freedom.

This is extraction as infrastructure: not episodic theft, but a systematic architecture that makes extraction the default output. The food doesn’t get inspected. The care doesn’t get approved. The transaction doesn’t stay private. The neighborhood doesn’t escape over-policing. The worker doesn’t gain leverage.

Because each pattern is legally structured, algorithmically mediated, and linguistically obscured, accountability dissolves. The harm is systemic, the mechanism is automated, and the code, both linguistic and algorithmic, makes extraction look like a process.

By year’s end, these won’t be pilot programs. They’ll be operational infrastructure. The extraction operating system will be running. Unless we recognize the architecture, see how the patterns connect, understand how they reinforce each other, and name the mechanisms before they’re normalized, we’ll navigate five separate crises without recognizing one unified system.

The code is written. The implementation timeline is set. The resistance window is now.

The extraction code for 2026 is written in five languages: administrative law, algorithmic logic, financial regulation, predictive analytics, and employment classification.

NEXT STEP: If you want the first-response playbook

This forecast helps you see the pattern. The Extraction Defense Kit helps you act when it hits your mailbox, your portal, your paycheck, or your care.

Inside the Kit:

The “force specificity” phone scripts (so you can get names, deadlines, and next steps on record)

The timeline builder (what to save, what to screenshot, what to log, and why it matters)

Pattern-specific templates (appeal language, documentation prompts, and escalation steps)

The Kit is in the Member Library.

Our job is to become fluent and then rewrite the system.
